Hi

Welcome (back) to The Prompt. AI isn’t well understood, but we learn a lot in our work that can help. In this newsletter, we share some of those learnings with you. If you find them helpful, make sure you’re signed up for the next issue. You have choices here – to receive our weekly US newsletter, our monthly EU newsletter, or if you really want to load up, then both.

[News] A proposal to harmonize US AI regulation

The US faces an increasingly urgent choice on AI: set clear national standards, or risk a patchwork of state rules – some subset of the 1,000 bills wending their way through state legislatures this year – that slow innovation without improving safety. Imagine how hard it would have been to win the Space Race if California’s aerospace and tech industries had gotten tangled up in state-by-state regulations that impeded innovation in transistor technology.

That’s why we’re calling for a different approach: having companies adhere to federal and global safety guidelines while creating a national model for other states to follow. We just became one of the first LLM companies to commit to working with the US government and its Center for AI Standards and Innovation (CAISI) to conduct evaluations of frontier models’ national security-related capabilities.

This week, we sent a letter to Gov. Gavin Newsom urging California to provide a path for states to harmonize their AI regulations with national – and by virtue of US leadership, emerging global – standards.

In our letter, we call for avoiding duplication and inconsistencies between state requirements and the safety frameworks already being advanced by the US government and our democratic allies. In particular, we urge California to see frontier model developers as compliant with state requirements when they enter into a safety-oriented agreement with a relevant US federal government agency, like CAISI, or when they sign onto a parallel regulatory framework like the EU’s Code of Practice (which we also signed).

Just as importantly, we urge the state to keep supporting smaller developers and startups by, for example, exempting them from state compliance requirements that bigger companies are better equipped to bear. We don’t want to inadvertently create a “California Environmental Quality Act (CEQA) for AI innovation” that would take California from leading in AI to lagging behind other states or even countries. (CEQA is a 1970 state law that, because its impacts weren’t understood, effectively took California from leading the country in housing policy to being basically unable to build housing.)

Aligning California with the standards being adopted by the US government will help ensure that the state is supporting the strategic imperative to build on US-led, democratic AI and not autocratic AI. The AI companies of the communist-led People’s Republic of China (PRC) aren’t likely to abide by our state laws – and actually will benefit from patchwork regulations that bog down their US competitors in inconsistent standards.

We continue to maintain that an approach based on clear federal rules will spur innovation, level the playing field for start-ups, and maintain America’s edge over the PRC. In the Intelligence Age, clarity on AI regulation isn’t optional – it’s essential.

[Insight] Outlook on China’s full-stack playbook

China’s progress on its state industrial policy for AI reflects a multi-decade playbook honed through its successes with EVs, solar, and 5G, as Beijing aims for full-stack self-sufficiency and global leadership on AI by 2030.

Overall, China’s approach is patient, comprehensive, and asymmetric where it needs to be. We expect intensified efforts in H2 2025 across the AI full stack, with emphasis on closing the compute gap while exploiting advantages in cost, infrastructure, and energy availability, along with global market access and regulatory arbitrage. By regulatory arbitrage, we mean PRC AI companies’ ability to benefit from the patchwork of rules being created by individual US states, which are easier to enforce against domestic AI companies than against PRC-based ones. Here’s a breakdown:

Massive state investment: Government funding for AI and related infrastructure surged to ~$290 billion in H1 2025, layered on top of ongoing national/provincial/municipal subsidies and tax breaks. (State investment is a key element for which we don’t see much publicly available tracking.)

Persisting compute gap: According to widely cited figures, China’s compute capacity is ~20% of the US’s, with chronic bottlenecks in advanced lithography and electronic design automation tools (design software for the most advanced microchips), despite further progress in domestic chips (e.g., Huawei Ascend 910C, Kunlun P800). However, China is finding export control workarounds, including overseas data centers, cloud access, and gray- and black-market chips.

Going global: China is leveraging its ties to 150+ countries and existing telecom infrastructure from its Belt & Road initiative to bundle AI services abroad – especially in Southeast Asia and the Middle East – with potential “lock-in” through standards, hosting, and service contracts.

Leadership, norms and standards push: China is proposing a World AI Cooperation Organization, its version of the democratic global framework that’s already emerging through the US CAISI, the UK AISI and the EU’s Code of Practice. It is also working aggressively through UN channels to advance Chinese norms and standards on issues like facial recognition.

IP acquisition and espionage: Ongoing cyber and human intelligence collection targets AI chips, dynamic random-access memory (DRAM), lithography, and model IP; distillation and other gray-area tactics are used to close performance gaps.

And here’s what to watch for:

China advancing 7-nm chips and pushing toward 4-nm: Chips built on smaller process nodes run faster and use less power, letting China build stronger AI at home and rely less on foreign suppliers. Hitting 4-nm is hard because it normally requires advanced lithography tools that China can’t easily buy; doing it without them adds many extra steps, raises costs, and lowers yields. It also requires cutting-edge design tools, materials, and packaging.

Subsidized “inference dumping” (making AI usage ultra-cheap, for example via APIs): If each AI answer costs pennies, users and developers flock in, which can starve rivals of traffic and data and set de facto standards. This works when the state helps with cheap compute, domestic chips, and large data-center capacity so providers can keep prices down.

Bundled “good-enough” AI for the Global South with built-in lock-in: Packaging cloud, models, services, support, and financing makes adoption easy for governments and state firms. Once core systems sit on one vendor’s stack, switching becomes costly, spreading that vendor’s standards and influence.

Concentrated state backing for chosen champions: Direct funding, priority access to compute, procurement deals, and regulatory support let a few firms scale quality and market share quickly. That accelerates product maturity at home and abroad and nudges the ecosystem to standardize around their toolchains.

Foundational knowledge for the above comes from the following sources; the conclusions are original to OpenAI:

Chan, Kyle, Gregory Smith, Jimmy Goodrich, Gerard DiPippo, and Konstantin F. Pilz. 2025. “Full Stack: China’s Evolving Industrial Policy for AI.” Expert Insights, RAND Corporation, June 26.

Chang, Wendy, Rebecca Arcesati, and Antonia Hmaidi. 2025. China’s Drive toward Self-Reliance in Artificial Intelligence: From Chips to Large Language Models. MERICS, July 22.

Maslej, Nestor, et al. 2025. Artificial Intelligence Index Report 2025. Institute for Human-Centered Artificial Intelligence, Stanford University, April 7.

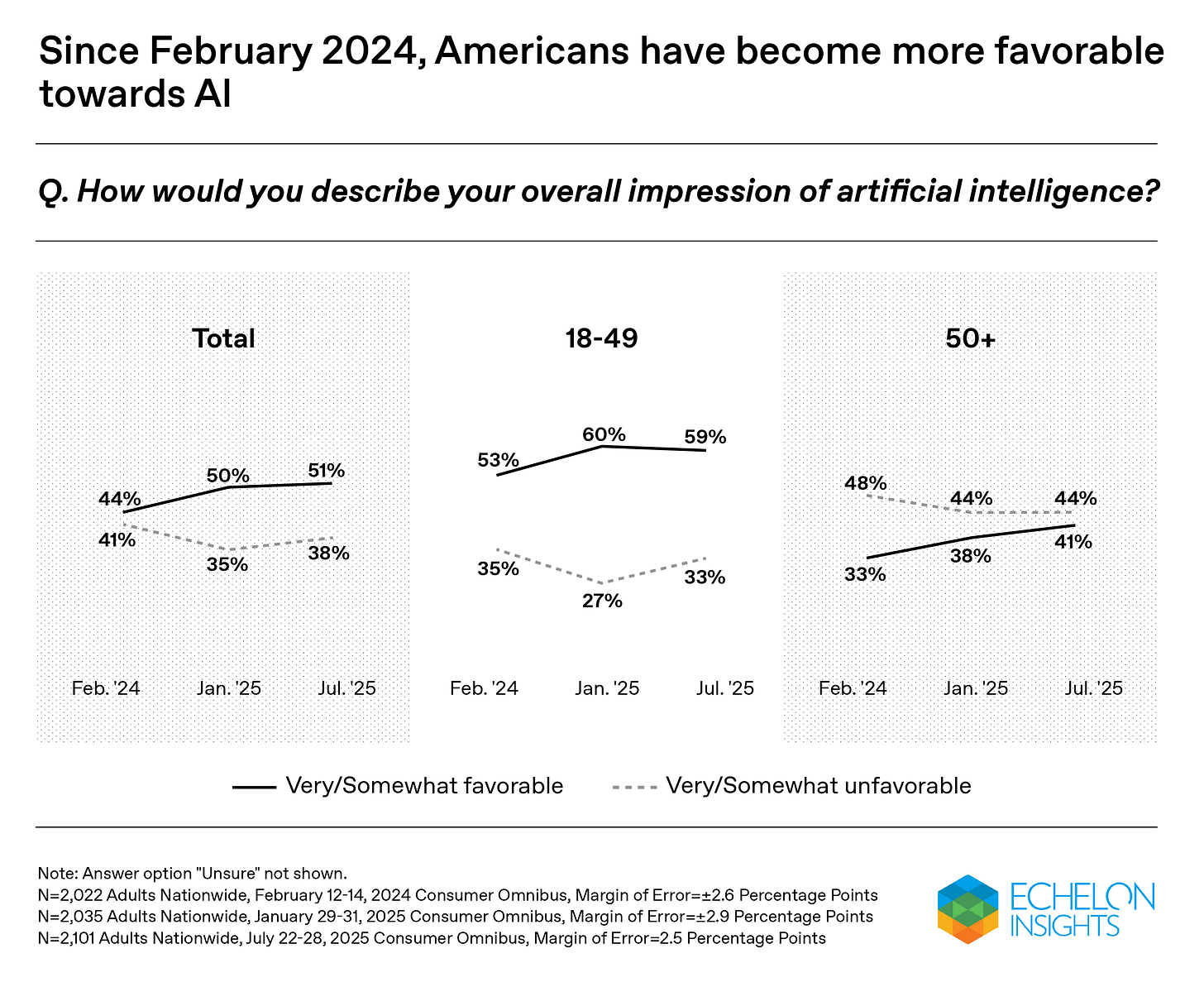

[Data] Americans get more comfortable with AI

These results from a recent Echelon Insights survey (July 22-28, 2,101 adults, +/- 2.5%) caught our eye: US adults, including older Americans, have become more favorable to AI over the past 18 months.

Overall, 51% of adults have a favorable view of AI, versus 38% who have an unfavorable view (+13) – up from 44% favorable, 41% unfavorable (+3) in Echelon’s Feb. 2024 poll. Strikingly, adults ages 50 and older have gone from 33% favorable, 48% unfavorable (-15) in Feb. 2024, to 41% favorable, 44% unfavorable (-3).

“As AI has become more and more prevalent in people's lives, Americans are feeling more warmly toward it,” said Kristen Soltis Anderson of Echelon Insights. “While I expect these numbers are still movable in either direction, it is a great sign for the AI industry that Americans are warming to this technology.”
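
The net figures in parentheses above are simply favorable minus unfavorable. As a quick check of that arithmetic, here is a minimal Python sketch. The function name `net_favorability`, the dictionary layout, and the "latest" label are illustrative assumptions, not part of the Echelon Insights survey; the percentages are the ones quoted above.

```python
# Illustrative only: recompute the net favorability figures quoted above.
# Percentages come from the text; all names here are hypothetical.

def net_favorability(favorable: int, unfavorable: int) -> int:
    """Return favorable minus unfavorable, in percentage points."""
    return favorable - unfavorable

# (favorable %, unfavorable %) as quoted in the text
polls = {
    ("all adults", "Feb. 2024"): (44, 41),
    ("all adults", "latest"): (51, 38),
    ("ages 50+", "Feb. 2024"): (33, 48),
    ("ages 50+", "latest"): (41, 44),
}

nets = {key: net_favorability(*values) for key, values in polls.items()}

for (group, poll), net in nets.items():
    print(f"{group}, {poll}: {net:+d}")

# Change in net favorability between the two polls, in points
shift_all = nets[("all adults", "latest")] - nets[("all adults", "Feb. 2024")]
shift_50_plus = nets[("ages 50+", "latest")] - nets[("ages 50+", "Feb. 2024")]
print(f"Net shift, all adults: {shift_all:+d} points")
print(f"Net shift, ages 50+: {shift_50_plus:+d} points")
```

On these numbers, net favorability rose 10 points among all adults and 12 points among adults 50 and older.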

[About] OpenAI Academy

The Academy is OpenAI’s free online and in-person AI literacy training program for beginners through experts.

[Disclosure]

Prompt graphics created by Base Three using ChatGPT.