DeepSeek at 1

AI for DC

Hi

Welcome (back) to The Prompt. AI isn’t well understood, but we learn a lot in our work that can help. In this newsletter, we share some of these learnings with you. If you find them helpful, make sure you’re signed up for the next issue.

[Insight] The DeepSeek moment at 1

A year after DeepSeek-R1 jolted the AI race, the US/China outlook is complex and hard to forecast, with many variables at play. Here’s what we know today, subject to change, or even to another seismic shock like the one DeepSeek’s first release delivered. (If that happens, it could come on the hardware side.)

The US continues to lead on model capabilities, and US models have also maintained a meaningful lead in science and more complex reasoning. But China has made real progress in deploying models widely and cheaply.

On benchmarks, Chinese systems made up ground quickly in 2024, but despite headlines following DeepSeek-R1’s release a year ago, China’s progress in 2025 was uneven as US export controls limited access to the computing power needed to train and run frontier models.

What has changed more decisively is depth and deployability: China now has a broad field of near-frontier models, many of them open-weight and aggressively priced, making them easier to deploy across industries and government systems.

Usage data reflects that shift. On OpenRouter, Chinese open-source models grew from 1.2% to nearly 30% of weekly activity at their 2025 peak. Inside China, state-backed firms such as Z.ai (formerly Zhipu AI) are rolling large models into public-sector workflows, and Z.ai’s IPO last week in Hong Kong signals Beijing’s willingness to turn model builders into national infrastructure.

Yet Chinese constraints are real. Training setbacks, outages, and delayed next-generation releases point to a persistent bottleneck: compute. Demand for advanced US chips from Chinese AI companies remains high, underscoring how difficult it is to scale frontier models without reliable access to cutting-edge hardware. Meanwhile, export controls on US chips and Chinese government views on importing them continue to evolve.

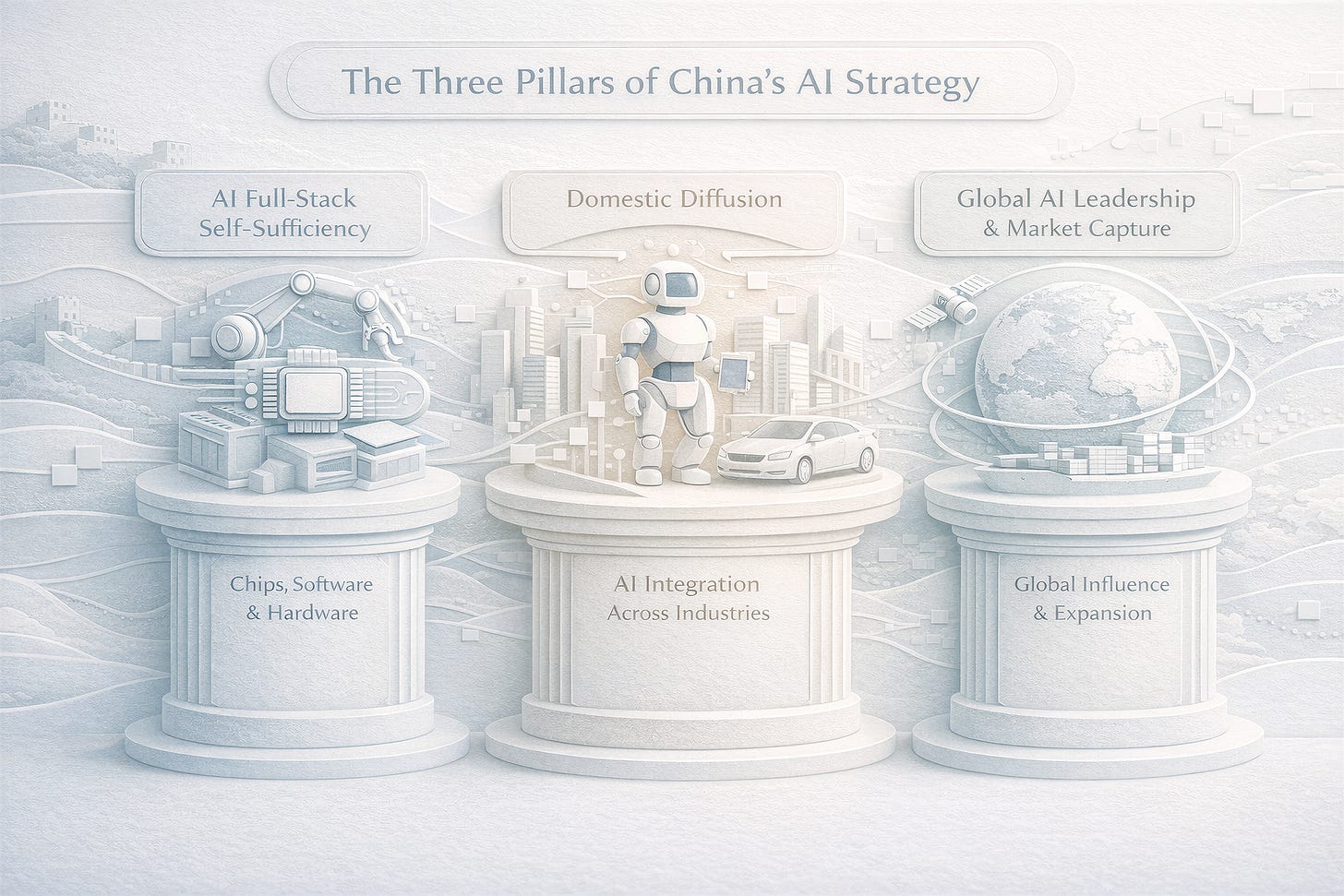

Beijing’s strategy has solidified around three ideas: 1) build a domestic AI stack; 2) push it everywhere at home (including as a key pillar of the People’s Liberation Army’s military modernization); and 3) export it abroad. Open-source releases from companies like Alibaba, updated in weeks rather than months, are designed to make Chinese models the default for local developers. At the same time, China is packaging models, cloud infrastructure, and hardware for overseas partners, testing “sovereign AI” stacks from Southeast Asia to the Middle East.

The real contest in 2026 will turn on three questions.

1. Can US models extend their lead in real-world usefulness? The 2026 “gap” question increasingly turns on which AI ecosystem converts models into economically meaningful work. Western platforms such as OpenAI’s Codex still lead in deployed agentic coding and workflow tools, even as Chinese models continue closing the gap.

2. Can China build enough compute? Export controls have historically limited China’s access to advanced chips and memory, while US hyperscalers have continued to expand data-center capacity. Scaling fast enough to close that gap remains a challenge. At the same time, the CCP is reportedly limiting the use of Western chips in new data centers and subsidizing Chinese chips, doubling down on self-reliance for a full AI stack.

3. Can China deploy at scale? Much of Beijing’s AI+ agenda and related incentives aim to accelerate diffusion by pushing AI into priority sectors through procurement, standards, and subsidies. Results will hinge on whether AI+ drives productivity advances in manufacturing and other key sectors while creating a product ecosystem comparable to that in the US. Internationally, China is bundling infrastructure, cloud platforms, models, and domestic hardware to foster reliance on its stacks. – OpenAI’s Intelligence & Investigations Team

[News] Our 2026 election prep

Two years ago, voters went to the polls in the first elections held with widely available generative AI, and OpenAI worked to detect and combat the misuse of our tools. We documented and disrupted several influence operations, including from foreign actors, and we shared our published findings with government officials and key stakeholders.

Beyond our own services, we publish detailed public reporting on the influence operations and coordinated abuse we uncover, which helps other platforms and stakeholders find and shut down related activity across the internet. We also released SafeBerry, an open-source safety model that lets organizations use AI to detect and stop misuse inside their own systems.

As the United States and other countries head into another major election year, the challenge is how to use AI to expand participation, lower barriers to entry, and give voters better access to information while reducing real risks of abuse. AI has advanced rapidly since 2024. OpenAI’s products now integrate more powerful web search, image and video generation, and a suite of new browser-based experiences and autonomous agents. These tools can all help people participate more fully in civic life, but they can also be misused.

OpenAI continues to prohibit ChatGPT from being used for campaign activity and persuasion. At the same time, we can see how, as our tools become more advanced, they could support a healthy, open civic environment and help lesser-resourced candidates and organizations reach voters. Lesser-funded candidates could, for example, make their own ads instead of paying hefty commissions to high-priced political consultants. As our tools and technology evolve, we recognize that our policies may need to evolve, as well.

We have product safeguards that help limit abuse involving depictions of public figures. We include a digital fingerprint (C2PA data) so people know that an image was generated using AI. And we prohibit any use of our products to deceive people about whether an image or video depicts real-world events, or to carry out election interference, demobilization activities, or impersonation.

Behind the scenes, dedicated teams monitor for coordinated abuse, investigate emerging threats, and restrict or ban accounts that violate our rules.

Our goal is to help voters get accurate and objective information. In the weeks ahead, we plan to outline how we’re working across stakeholders to address deepfakes, surface accurate voting information, and ensure rapid-response systems are in place for major elections in 2026.

[AI Economics] Labor market update

Last Friday, the Department of Labor released the December jobs report. Here are a few takeaways from our Economic Research team, particularly as they relate to AI:

The unemployment rate of 4.4%, which is slightly lower than in the prior release, suggests the labor market is cooling but not collapsing. Unemployment remains low by historical standards (the 30-year average is 5.5%).

The addition of just 50,000 jobs in December indicates that hiring momentum has slowed. Private-sector employment grew by 584,000 jobs in 2025, an average of about 49,000 jobs per month, which represents a sharp slowdown from 2024, when employment grew by about 2 million jobs or 168,000 per month. The labor market is therefore still expanding but at a much slower pace than in the immediate post-pandemic period.

The unemployment rate for workers ages 16-24 is now 10.4%, up almost 4 percentage points from 6.6% in mid-2023, sustaining pressure on early-career jobs. The rate for prime-age workers (ages 25-54) has been stable over the past year.
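For readers who want to check the arithmetic behind these takeaways, here is a minimal sketch in Python. It uses only the figures quoted above; the 12-month divisor and the rounding are our assumptions about how the monthly averages were derived, not a description of the Economic Research team's method.

```python
# Figures quoted in the takeaways above.
PRIVATE_JOBS_2025 = 584_000      # private-sector jobs added in 2025
JOBS_2024_APPROX = 2_000_000     # "about 2 million" jobs added in 2024
YOUTH_RATE_NOW = 10.4            # unemployment rate, ages 16-24 (%)
YOUTH_RATE_MID_2023 = 6.6        # same rate in mid-2023 (%)
MONTHS_PER_YEAR = 12             # assumption: simple 12-month average


def monthly_average(annual_jobs: float, months: int = MONTHS_PER_YEAR) -> float:
    """Return the average number of jobs added per month."""
    return annual_jobs / months


avg_2025 = monthly_average(PRIVATE_JOBS_2025)
avg_2024 = monthly_average(JOBS_2024_APPROX)

# Change in the youth unemployment rate, in percentage points.
youth_change_points = round(YOUTH_RATE_NOW - YOUTH_RATE_MID_2023, 1)

print(f"2025 average: {avg_2025:,.0f} jobs per month")
print(f"2024 average: {avg_2024:,.0f} jobs per month")
print(f"Youth rate change: {youth_change_points} percentage points")
```

The 2025 average rounds to the roughly 49,000 per month cited above. The rounded 2 million total for 2024 gives about 167,000 per month, so the 168,000 figure presumably comes from the unrounded annual number.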

What forecasters expect for 2026:

Most mainstream economic forecasts project that the labor market will remain largely unchanged in 2026. These forecasts generally place the unemployment rate in the mid-4% range for the year. Most forecasters also expect continued slow but positive employment growth.

The relative stability in forecasts suggests that while AI is already changing tasks inside roles, the translation of these changes into aggregate employment is expected to be more gradual. This is in part because diffusion takes time and because the sectors with the most employees, such as healthcare and education, have historically been more protected from large, technology-driven shifts in hiring. – OpenAI Economic Research

[Event] Navigating healthcare with AI

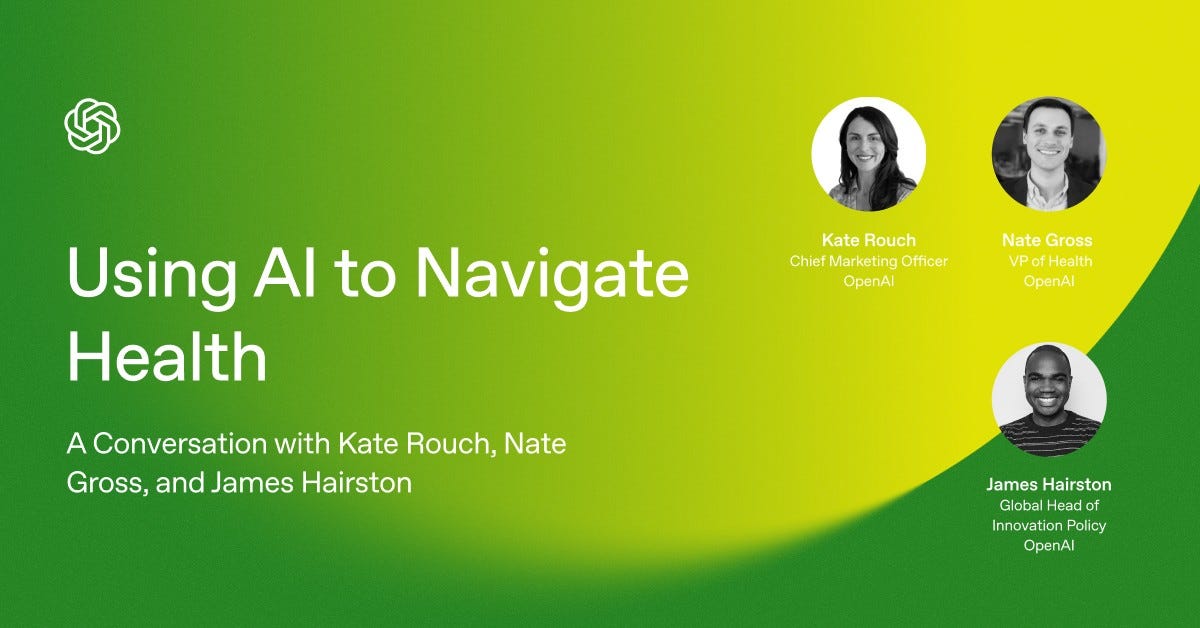

Building on our data and stories about how millions are using ChatGPT to navigate questions about their healthcare, the OpenAI Forum is hosting a talk on this timely topic this Thursday. Register here to attend this discussion featuring OpenAI Chief Marketing Officer Kate Rouch; Nate Gross, who leads the healthcare initiative at OpenAI; and James Hairston, OpenAI’s global head of innovation policy.

4:45 PM - 5:25 PM ET on JAN 15

[About] Introducing OpenAI Academy for news orgs

The Prompt’s bench of authors and contributors is loaded up with former journalists, and we routinely think about how we would have incorporated AI and ChatGPT into our old jobs. So we’re extra psyched about the launch of OpenAI Academy for News Organizations, in partnership with the American Journalism Project and The Lenfest Institute for Journalism. It’s a hub for journalists, editors, and publishers using AI.

At the outset, this Academy will provide on-demand training (including “AI Essentials for Journalists”), practical use cases, open-source projects, and guidance on responsible uses.

[Disclosure]

Graphics created by Base Three using ChatGPT.